I

2020 July 14

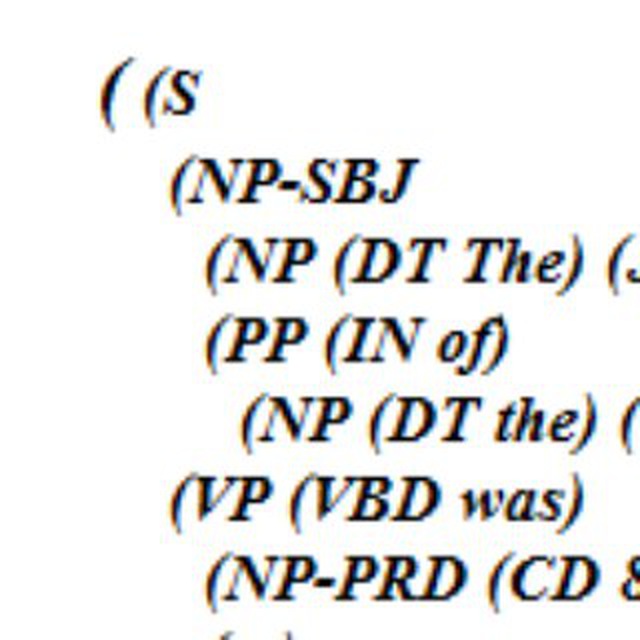

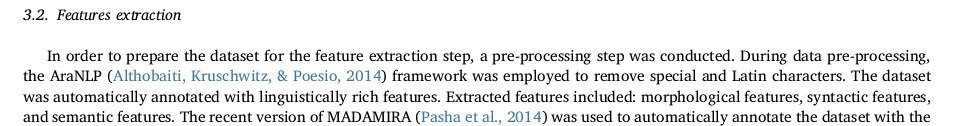

I am reading papers about aspect-based sentiment analysis. I know that words can be represented by word embeddings, TF-IDF, or bag of words, but some papers say they use morphological, syntactic, and semantic features without mentioning how they encode them. What does this mean? How do they extract these features, and how do they encode them as numbers? If anyone knows a blog, book, video, or anything else that explains it, I would be thankful.

what language are you talking about?

GZ

A "forklow" like this comes to mind, which seems typical:

1) extract the entities in the text

2) normalize them

3) search over the normalized forms
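The three steps above can be sketched roughly as follows. This is only a toy illustration: the knowledge base, the regex-based extractor, and all function names are hypothetical, and in practice a trained NER model (for example from spaCy, mentioned in this chat) would replace the regex.

```python
import re

# Hypothetical toy knowledge base: normalized entity name -> description.
KNOWLEDGE_BASE = {
    "new york": "city in the USA",
    "moscow": "capital of Russia",
}


def extract_entities(text):
    """Step 1: naive entity extraction, here just runs of capitalized words."""
    return re.findall(r"[A-Z][a-z]+(?:\s+[A-Z][a-z]+)*", text)


def normalize(entity):
    """Step 2: naive normalization, lowercasing and collapsing whitespace."""
    return " ".join(entity.lower().split())


def lookup(text):
    """Step 3: search the knowledge base by the normalized form."""
    results = {}
    for entity in extract_entities(text):
        key = normalize(entity)
        if key in KNOWLEDGE_BASE:
            results[entity] = KNOWLEDGE_BASE[key]
    return results


print(lookup("I flew from New York to Moscow"))
```

The point of the normalization step is that different surface forms of the same entity map to one key, so the search only has to deal with canonical forms.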

"Forklow"? What is that in English?

I am looking at spaCy and Prodigy right now, but I don't know whether they will help me or not.

p

I have a question regarding text preprocessing:

1. Does anyone have a list contrasting lemmatization and stemming across different libraries like NLTK, spaCy, keras.preprocessing, and others?

2. How should we decide which tool is best suited for the task?

3. Does working with deep learning models require preprocessing? (I am still new to seq2seq models.) Are there any practices to keep in mind for this task?

Thank you.
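To make the contrast in question 1 concrete, here is a minimal sketch in pure Python. It is not the algorithm of NLTK, spaCy, or any other library: the suffix list and the lemma dictionary are made-up toy data, chosen only to show that stemming strips suffixes by rule (and may produce non-words), while lemmatization looks up a dictionary form.

```python
# Hypothetical toy lemma dictionary; real lemmatizers use full lexicons and POS tags.
LEMMAS = {"studies": "study", "running": "run", "better": "good", "ran": "run"}

# Hypothetical suffix list; real stemmers (e.g. Porter) use many ordered rules.
SUFFIXES = ("ing", "ies", "ed", "es", "s")


def toy_stem(word):
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def toy_lemmatize(word):
    """Return the dictionary form if known, otherwise the word itself."""
    return LEMMAS.get(word, word)


for word in ["studies", "running", "better", "ran"]:
    print(word, toy_stem(word), toy_lemmatize(word))
```

The stemmer is fast and needs no resources but cannot handle irregular forms such as "better" or "ran"; the lemmatizer handles them but only for words its lexicon covers.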

p

This is for the English language.

I

"Forklow"? What is that in English?

I am looking at spaCy and Prodigy right now, but I don't know whether they will help me or not.

Sorry, I meant workflow. In short, an "algorithm".

NG

what language are you talking about?

Any language. The paper is about Arabic, but I want to understand this for any language.

I

I guess you are talking about this article: https://www.sciencedirect.com/science/article/abs/pii/S0306457316305623

I

So, I see the authors use a tool named AraNLP.

NG

I am reading the same paper, but because I don't have a good background, I don't know how the features are converted to numbers.

NG

Thank you a lot, and I am sorry for the bother 🙈

2020 July 15

I

I am reading the same paper, but because I don't have a good background, I don't know how the features are converted to numbers.

Ok. I got it.

I think you need to read something fairly basic. It also depends on which pipeline you want to use: roughly speaking, shallow models or transfer learning. In both cases, though, the features will probably need to be encoded using one-hot encoding (OHE). I'll try to find a tutorial now.
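As a rough illustration of what one-hot encoding of such features could look like, here is a minimal pure-Python sketch. The feature names and values (`pos`, `number`, `dep`) are invented for the example and are not taken from the paper or from AraNLP; in practice they would come from a morphological analyzer or parser, and a library class such as scikit-learn's `DictVectorizer` does the same job.

```python
# Hypothetical per-token features, as a tagger or parser might produce them.
tokens = [
    {"pos": "NOUN", "number": "sing", "dep": "nsubj"},
    {"pos": "VERB", "number": "sing", "dep": "root"},
    {"pos": "NOUN", "number": "plur", "dep": "obj"},
]


def build_vocabulary(rows):
    """Assign one vector position to every distinct (feature, value) pair."""
    pairs = sorted({(name, value) for row in rows for name, value in row.items()})
    return {pair: index for index, pair in enumerate(pairs)}


def one_hot(row, vocabulary):
    """Set a 1 at the position of each (feature, value) pair the token has."""
    vector = [0] * len(vocabulary)
    for pair in row.items():
        if pair in vocabulary:  # unseen values are simply left as all zeros
            vector[vocabulary[pair]] = 1
    return vector


vocabulary = build_vocabulary(tokens)
vectors = [one_hot(row, vocabulary) for row in tokens]
for row, vector in zip(tokens, vectors):
    print(row, vector)
```

Each token becomes a fixed-length 0/1 vector, which can be fed to a shallow model directly or concatenated with a word embedding.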

NG

Yes, this is what I want, thank you.

D

Good morning, I have a question:

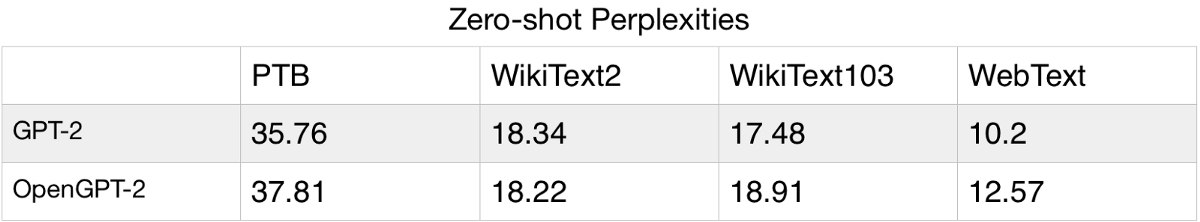

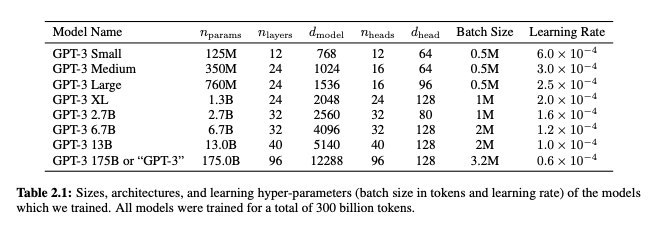

How feasible is it, in principle, to build a replica of GPT-3 today (for people who are not data scientists)?

Here is an example for the second one (GPT-2): https://blog.usejournal.com/opengpt-2-we-replicated-gpt-2-because-you-can-too-45e34e6d36dc

t

Is the question about the availability of the algorithms or of the hardware? 🙂

D

That is, to build a replica that is an acceptable middle ground between 13B and 175B.

D

Is the question about the availability of the algorithms or of the hardware? 🙂

All of the above.

t

They don't seem to have changed the algorithms, and the architecture is open; they just stacked even more layers and extended the context.
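To put "stacked even more layers" into numbers, here is a back-of-the-envelope sketch using the common approximation of about 12 · layers · width² non-embedding parameters for a decoder-only Transformer. The layer counts and widths below are the configurations as I recall them from the GPT-2 and GPT-3 papers, so treat them as assumptions; the function name is made up for the example.

```python
def approx_params(n_layers, d_model):
    """Rough non-embedding parameter count: 12 * n_layers * d_model ** 2."""
    return 12 * n_layers * d_model ** 2


# (number of layers, model width); values quoted from memory, not verified here.
configs = {
    "GPT-2 1.5B": (48, 1600),
    "GPT-3 13B": (40, 5140),
    "GPT-3 175B": (96, 12288),
}

for name, (layers, width) in configs.items():
    billions = approx_params(layers, width) / 1e9
    print(f"{name}: ~{billions:.1f}B parameters")
```

The estimate lands close to the advertised sizes, which supports the point that the jump from GPT-2 to GPT-3 is mostly depth and width (plus a context window that, as far as I remember, went from 1024 to 2048 tokens) rather than a new architecture.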

t

As for the hardware... it's a question of budget ¯\_(ツ)_/¯
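A rough sketch of why the hardware is the hard part. The assumptions here are mine, not from the chat: 2 bytes per parameter for fp16 weights, about 16 bytes per parameter for mixed-precision Adam training state (fp16 weights and gradients plus fp32 master weights and two optimizer moments), a 32 GiB accelerator, and no memory at all counted for activations.

```python
import math


def memory_gib(n_params, bytes_per_param):
    """Memory needed for n_params values at the given size, in GiB."""
    return n_params * bytes_per_param / 2**30


N_PARAMS = 175e9          # GPT-3 175B
GPU_MEMORY_GIB = 32       # assumed accelerator size

fp16_weights = memory_gib(N_PARAMS, 2)      # inference-only weights
training_state = memory_gib(N_PARAMS, 16)   # weights + gradients + Adam state
min_gpus = math.ceil(training_state / GPU_MEMORY_GIB)

print(f"fp16 weights:   {fp16_weights:.0f} GiB")
print(f"training state: {training_state:.0f} GiB")
print(f"GPUs needed just to hold the training state: {min_gpus}")
```

This is a lower bound on memory only; it says nothing about the compute time or the cost of the training run itself.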

t

If I had as much money as they do, I would probably train GPT-3 out of boredom too.