IP

2019 May 16

Feature 3 is marked as Good

yes, but you said features are judged only by the p-value

AD

the cutoff is 0.01

SZ

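```python
# The wildcard import provides ScoreConfig and ScoreType used below.
from catboost.eval.evaluation_result import *

# `result` is assumed to hold the output of an earlier feature-evaluation run
# (not shown in this message).
logloss_result = result.get_metric_results('Logloss')

# Compare the evaluated features with the baseline using relative scores.
logloss_result.get_baseline_comparison(
    ScoreConfig(ScoreType.Rel, overfit_iterations_info=False)
)
```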

Thanks

AD

statistical significance is judged by the p-value

AD

[good or bad] vs unknown

AD

good vs bad: the decision is based on the score
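A minimal sketch of the decision rule described in this thread: a p-value cutoff separates "[good or bad]" from "unknown", and the score then separates good from bad. The function name `classify_feature`, the strict `<` comparison at the cutoff, and the sign convention (positive score means the feature improves the metric) are all hypothetical assumptions for illustration; this is not CatBoost's internal code.

```python
def classify_feature(pvalue: float, score: float, alpha: float = 0.01) -> str:
    """Hypothetical verdict for one evaluated feature."""
    # Not statistically significant at the chosen cutoff: no verdict.
    if pvalue >= alpha:
        return "UNKNOWN"
    # Assumed convention: a positive score means the feature helps.
    return "GOOD" if score > 0 else "BAD"


print(classify_feature(0.2, 5.0))
print(classify_feature(0.001, 1.5))
print(classify_feature(0.001, -1.5))
```

A large score with a high p-value still yields "UNKNOWN", which matches the point that significance is decided by the p-value alone.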

IP

good vs bad: the decision is based on the score

that's exactly what I wanted to know

IP

@annaveronika thanks

P🐈

If my dataset has several targets (columns), what's the easiest way to predict all of them? Train a separate model for each one?

P🐈

These targets are different in nature, but they are correlated.

Bo

If my dataset has several targets (columns), what's the easiest way to predict all of them? Train a separate model for each one?

Is each column in your dataset the target variable in turn?

P🐈

There are feature columns and there are target columns, yes

AD

If my dataset has several targets (columns), what's the easiest way to predict all of them? Train a separate model for each one?

Yes, that's the only way
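A minimal sketch of the one-model-per-target approach. `fit_per_target`, `predict_per_target` and `MeanModel` are hypothetical names; `MeanModel` is only a trivial stand-in so the example runs without extra packages. Any object with `fit(X, y)` and `predict(X)` methods can be plugged in through `make_model`.

```python
from typing import Any, Callable, Dict, List, Sequence


class MeanModel:
    """Hypothetical stand-in model: always predicts the mean of its training target."""

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[float]) -> None:
        self.mean_ = sum(y) / len(y)

    def predict(self, X: Sequence[Sequence[float]]) -> List[float]:
        return [self.mean_ for _ in X]


def fit_per_target(X: Any, targets: Dict[str, Sequence[float]],
                   make_model: Callable[[], Any]) -> Dict[str, Any]:
    """Train one independent model per target column on the same features X."""
    models = {}
    for name, y in targets.items():
        model = make_model()
        model.fit(X, y)
        models[name] = model
    return models


def predict_per_target(models: Dict[str, Any], X: Any) -> Dict[str, List[float]]:
    """Collect the predictions of every per-target model, keyed by target name."""
    return {name: list(model.predict(X)) for name, model in models.items()}


X = [[1.0, 0.0], [2.0, 1.0], [3.0, 0.0]]
targets = {"price": [10.0, 20.0, 30.0], "demand": [1.0, 1.0, 4.0]}
models = fit_per_target(X, targets, MeanModel)
print(predict_per_target(models, X))
```

With CatBoost installed, `make_model` could be, for example, `lambda: CatBoostRegressor(verbose=False)`. Note that each model is trained independently, so this scheme does not exploit the correlation between the targets.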

2019 May 17

AD

We're on Slack now: the Russian-speaking opendatascience (#tool_catboost) and the English-speaking dscommunity (#catboost). Fans of Slack threads are welcome there! In the English-speaking Slack the channel is private, but you can ask me and I'll add you.

Sign up here: https://ods.ai (Join) and here: https://app.dataquest.io/chat

AV

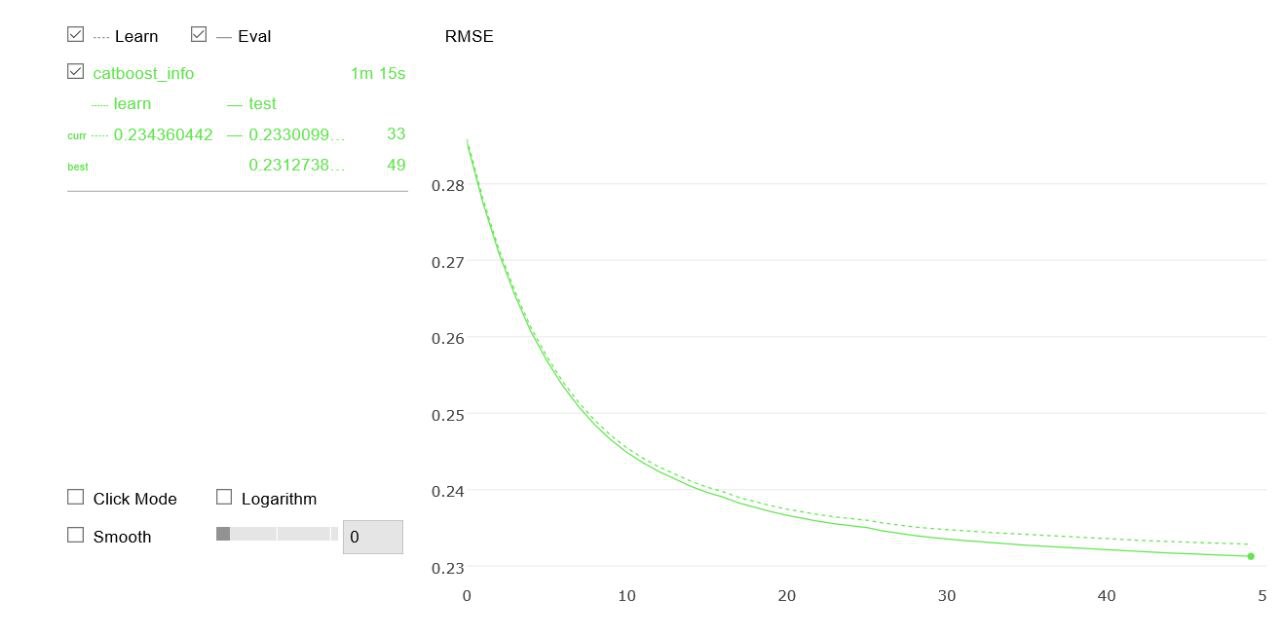

Could you please tell me why training can end up like in the picture: RMSE on the test set is consistently lower than on the train set?

AD

Thanks for the great question. We will soon publish a FAQ that answers it.

Why is the metric value on the validation dataset sometimes better than on the training dataset?

This happens because auto-generated numerical features that are based on categorical features are calculated differently for the training dataset and for the validation dataset.

For the training dataset, the feature is calculated differently for every object. For object i, the feature is calculated from the data of the first i-1 objects (the first i-1 objects in some random permutation).

For the validation dataset, the same feature is calculated using data from all objects of the training dataset.

A feature calculated from all objects of the training dataset uses more data than a feature calculated from only part of it. This makes it a more powerful feature, and a more powerful feature results in a better loss value.

Thus, the loss value on the validation dataset might be better than the loss value on the training dataset, because the validation dataset has more powerful features.

The algorithm for calculating these auto-generated numerical features, and its theoretical foundations, are described in the following papers:

https://tech.yandex.com/catboost/doc/dg/concepts/educational-materials-papers-docpage/ (the first two papers) and in this video: https://tech.yandex.com/catboost/doc/dg/concepts/educational-materials-videos-docpage/ (the second video).
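A simplified sketch of the effect described above, assuming a plain mean-target encoding with a hypothetical prior of 0.5. CatBoost's actual formulas, priors and permutation handling are described in the linked papers and differ in detail; the function names here are hypothetical. On the training set, each object is encoded only from the objects that precede it in a random permutation; validation objects are encoded from the whole training set.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence


def ordered_encoding(categories: Sequence[str], targets: Sequence[float],
                     prior: float = 0.5, seed: int = 0) -> List[float]:
    """Train-time encoding: each object only sees objects before it in a random permutation."""
    order = list(range(len(categories)))
    random.Random(seed).shuffle(order)
    sums: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    encoded = [0.0] * len(categories)
    for idx in order:
        cat = categories[idx]
        # Statistics from the objects earlier in the permutation only.
        encoded[idx] = (sums[cat] + prior) / (counts[cat] + 1)
        # Only now is the current object's own target added to the statistics.
        sums[cat] += targets[idx]
        counts[cat] += 1
    return encoded


def full_encoding(train_categories: Sequence[str], train_targets: Sequence[float],
                  apply_categories: Sequence[str], prior: float = 0.5) -> List[float]:
    """Validation-time encoding: statistics from all training objects."""
    sums: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for cat, y in zip(train_categories, train_targets):
        sums[cat] += y
        counts[cat] += 1
    return [(sums[cat] + prior) / (counts[cat] + 1) for cat in apply_categories]


train_cats = ["a", "a", "a", "a"]
train_y = [1.0, 1.0, 1.0, 1.0]
# Training values are built from 0, 1, 2 and 3 preceding objects respectively.
print(sorted(ordered_encoding(train_cats, train_y)))
# The validation value is built from all 4 training objects.
print(full_encoding(train_cats, train_y, ["a"]))
```

Here every target is 1, and the validation-time value lies closer to 1 than any train-time value, because it is computed from more data.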

AV

Isn't the numerical feature on the train set averaged over several permutations? At first glance, it seems that would change the situation.

AD

Averaging is not done anywhere, and there is no need for it. During training, one could additionally compute the loss of the final model, but that would require computing its values on every object during training and keeping all of them in memory. That is slow, uses memory, and nobody needs it.