AM


2017 May 30

In theory

ὦ

Even after adding more memory it still crashes

ὦ

with an offHeap error

AM

Well, that's what I'm saying. You need to find an option to use disk when memory runs out

ὦ


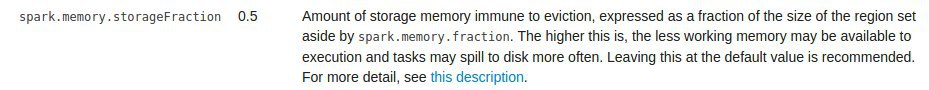

Looks like this one

AM

This thing splits your heap into a share for Spark and a share for your code

AM

Oops, I mixed it up with another option

ὦ

I just read that tasks may spill to disk often
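For context on what "spilling to disk" means for a grouping task, here is a toy sketch in plain Python, not Spark code. The function name `group_with_spill` and the `max_keys_in_memory` budget are hypothetical: a key count stands in for a real memory limit. When the in-memory hash table grows past the budget, it is written to a temp file and cleared, and the partial tables are merged at the end.

```python
import os
import pickle
import tempfile
from collections import defaultdict


def group_with_spill(pairs, max_keys_in_memory):
    """Group (key, value) pairs by key, spilling the in-memory hash table to a
    temp file whenever it holds more than max_keys_in_memory distinct keys.

    Hypothetical toy: max_keys_in_memory stands in for a real memory budget.
    Returns (grouped dict, number of spill files written).
    """
    spill_paths = []
    table = defaultdict(list)

    def spill():
        # Dump the partial hash table to disk and free the in-memory copy.
        fd, path = tempfile.mkstemp(suffix=".spill")
        with os.fdopen(fd, "wb") as f:
            pickle.dump(dict(table), f)
        spill_paths.append(path)
        table.clear()

    for key, value in pairs:
        table[key].append(value)
        if len(table) > max_keys_in_memory:
            spill()

    # Merge spill files in the order they were written, then the in-memory
    # remainder, so each key's values keep their input order. For simplicity
    # this merge holds the full result in memory; a real engine would stream
    # a merge of sorted spill files instead.
    result = defaultdict(list)
    for path in spill_paths:
        with open(path, "rb") as f:
            partial = pickle.load(f)
        os.remove(path)
        for key, values in partial.items():
            result[key].extend(values)
    for key, values in table.items():
        result[key].extend(values)
    return dict(result), len(spill_paths)


grouped, n_spills = group_with_spill([(i % 5, i) for i in range(20)], max_keys_in_memory=2)
print(n_spills, grouped[0])
```

With a budget of 2 keys and 20 pairs over 5 keys, the table spills several times, yet every key still ends up with all four of its values. Each spill costs disk I/O, which is why a tighter memory budget means more frequent spills.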

AM

Sometimes, you will get an OutOfMemoryError not because your RDDs don’t fit in memory, but because the working set of one of your tasks, such as one of the reduce tasks in groupByKey, was too large. Spark’s shuffle operations (sortByKey, groupByKey, reduceByKey, join, etc) build a hash table within each task to perform the grouping, which can often be large. The simplest fix here is to increase the level of parallelism, so that each task’s input set is smaller. Spark can efficiently support tasks as short as 200 ms, because it reuses one executor JVM across many tasks and it has a low task launching cost, so you can safely increase the level of parallelism to more than the number of cores in your clusters.
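The quoted advice can be illustrated with a toy model of hash partitioning in plain Python (similar in spirit to Spark's `HashPartitioner`, but not Spark code; `max_partition_size` is a hypothetical helper). The largest partition is a rough proxy for the working set of the busiest shuffle task. It shows why more partitions help with evenly spread keys, and also why they cannot shrink a single hot key, since all values for one key still land in one task.

```python
from collections import Counter


def max_partition_size(keys, num_partitions):
    """Records in the largest partition when each record goes to partition
    hash(key) % num_partitions (a toy model of hash partitioning).

    The largest partition approximates the input of the busiest shuffle
    task, e.g. one reduce task of groupByKey.
    """
    counts = Counter(hash(key) % num_partitions for key in keys)
    return max(counts.values())


uniform = range(100_000)                        # 100k distinct integer keys
skewed = [0] * 50_000 + list(range(1, 50_001))  # one hot key (0) plus 50k distinct keys

for n in (8, 200):
    # prints: 8 12500 56250, then 200 500 50250
    print(n, max_partition_size(uniform, n), max_partition_size(skewed, n))
```

Integer keys are used on purpose: Python hashes small non-negative integers to themselves, so the result is deterministic, whereas string hashes are randomized per process.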

AM

That's what the docs say

ὦ

Yeah

ὦ

So I need to raise the level of parallelism

AM

That may not help

ὦ

Time to try it

AM

In my view, you'd be better off limiting the dataset. Remember the sample option?

AM

Try playing with its value

AM

For example, 0.01 is 1% of the dataset
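The "sample option" in the chat is not identified, so this is a plain-Python sketch assuming it takes a fraction, as Spark's `DataFrame.sample(fraction=...)` does. The helper `bernoulli_sample` is hypothetical. It keeps each record independently with probability `fraction`, so 0.01 keeps roughly, not exactly, 1% of the records. Spark's documentation likewise says `sample` does not guarantee the exact fraction.

```python
import random


def bernoulli_sample(records, fraction, seed=None):
    """Keep each record independently with probability `fraction`
    (sampling without replacement; result size is only approximate)."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    rng = random.Random(seed)  # seeded for reproducible samples
    return [r for r in records if rng.random() < fraction]


sample = bernoulli_sample(range(100_000), 0.01, seed=42)
print(len(sample))  # around 1000, not exactly 1000
```

Passing a fixed seed makes the sample reproducible between runs, which helps when comparing memory behaviour at different fractions.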

AM

In general, Spark is usually run on tons of memory (across n machines), so it's no surprise your 8 aren't enough
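A rough back-of-the-envelope sketch of why a single machine's heap shrinks quickly in Spark. It assumes that the "8" means an 8 GB JVM heap and that Spark 2.x unified memory defaults apply: 300 MB reserved, `spark.memory.fraction` = 0.6 and `spark.memory.storageFraction` = 0.5. The helper name is hypothetical. This covers the JVM heap only; off-heap memory is configured separately.

```python
# Assumptions: Spark 2.x unified memory manager defaults; 8 GB heap.
RESERVED_MB = 300  # memory Spark reserves before applying the fractions


def unified_memory_split(heap_mb, memory_fraction=0.6, storage_fraction=0.5):
    """Rough split of a JVM heap under Spark's unified memory model (MB)."""
    usable = heap_mb - RESERVED_MB
    unified = usable * memory_fraction    # shared by execution and storage
    storage = unified * storage_fraction  # storage share immune to eviction
    return {
        "unified": unified,
        "storage": storage,
        "execution": unified - storage,   # execution may also borrow unused storage
        "user": usable - unified,         # left for user data structures
    }


for name, mb in unified_memory_split(8 * 1024).items():
    print(f"{name:>9}: {mb:7.1f} MB")
```

Under these assumptions, only about 4.7 GB of an 8 GB heap is available to Spark for execution and caching combined, and that is shared by all tasks running concurrently on the machine.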