k

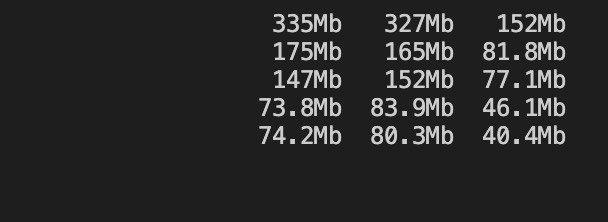

2020 June 02

Retested: on reweight, traffic does go to other hosts. Last night I was watching the public network instead of the private one, which is why I didn't see any changes )))) I guess nights are for sleeping )

You should. And you're banned))

AM

ceph osd reweight 0 0.1

ВН

Retested: on reweight, traffic does go to other hosts. Last night I was watching the public network instead of the private one, which is why I didn't see any changes )))) I guess nights are for sleeping )

ceph pg ls remapped, why look at traffic at all

AM

well, it can remap within a single host

AM

from OSD to OSD

AM

this goes back to the point that reweight does not act only within a host

ВН

this goes back to the point that reweight does not act only within a host

reweight keeps the data within the host; maybe the rebalance just always goes through other hosts

ВН

i.e. there is no guarantee that data headed for the remapped OSD will come _only_from_the_previous_one_

AM

reweight keeps the data within the host; maybe the rebalance just always goes through other hosts

well, no ))) I'll grab screenshots in a sec

AM

it will take a bit longer

ВН

well, no ))) I'll grab screenshots in a sec

well, then the documentation is flat-out lying, what else 😊

AM

well, no ))) I'll grab screenshots in a sec

actually no, I won't screenshot it )))
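
To settle the question from `ceph pg ls remapped` rather than from traffic graphs, one could compare the hosts behind each PG's `up` and `acting` sets. Below is a minimal sketch, not a tested tool. It assumes the PG list has already been parsed into dicts with `pgid`, `up` and `acting` keys, and that an OSD-to-host map has been built separately (for example from `ceph osd tree`). The function name `classify_remapped` and all sample values are hypothetical.

```python
def classify_remapped(pgs, osd_host):
    """Split remapped PGs into those staying on the same hosts and those changing hosts.

    pgs      -- list of dicts with "pgid", "up" and "acting" (assumed shape)
    osd_host -- dict mapping OSD id -> host name (assumed to be built elsewhere)
    """
    within, across = [], []
    for pg in pgs:
        up_hosts = {osd_host[osd] for osd in pg["up"]}
        acting_hosts = {osd_host[osd] for osd in pg["acting"]}
        # Same host set before and after: the PG only moves between OSDs of one host.
        if up_hosts == acting_hosts:
            within.append(pg["pgid"])
        else:
            across.append(pg["pgid"])
    return within, across


# Hypothetical sample: osd.0 and osd.1 share host "a".
osd_host = {0: "a", 1: "a", 2: "b", 3: "b", 4: "c"}
pgs = [
    {"pgid": "1.0", "up": [1, 2], "acting": [0, 2]},  # osd.0 -> osd.1, same host
    {"pgid": "1.1", "up": [4, 2], "acting": [0, 2]},  # osd.0 -> osd.4, other host
]
within, across = classify_remapped(pgs, osd_host)
print("within host:", within)
print("across hosts:", across)
```

This only shows where PGs end up. It says nothing about which OSD the backfill data is read from, which is the separate point raised above.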

N

For those who can't live without Kubernetes and are looking for adventure

https://habr.com/ru/company/flant/blog/477680/

As far as I understand, this is one of the causes of what happened: https://github.com/rook/rook/issues/4274

N

Comments from the developer:

"k8s API must have returned NotFound for the secret (see here) where the basic cluster info is stored. If the secret is not found, Rook will assume that it's a new cluster and create new creds here. The killer here is if the k8s API is returning invalid responses, the operator will do the wrong thing. If K8s is returning invalid responses to the caller instead of failure codes, we really need a fix from K8s or else a way to know when the K8s API is unstable."

"Kubernetes as a distributed application platform must be able to survive network partitions and other temporary or catastrophic events. It's based on etcd for its config store for this reason. If there is ever a loss of quorum, etcd will halt and the cluster should stop working. Similarly, Ceph is also designed to halt if the cluster is too unhealthy, rather than continuing and corrupting things.

Is there a possibility that your K8s automation is reseting K8s in some way that would be causing this? I haven't heard of others experiencing this issue. This corruption is completely unexpected from K8s. Otherwise, Rook or other applications can't rely on it as a distributed platform."

Comments from the person who reported the bug:

"I believe if either of those two things behaved differently, similar stories to ours wouldn't be popping up out there in the k8s world:

https://medium.com/flant-com/rook-cluster-recovery-580efcd275db

I didn't see any root cause in that blog post but I'm fairly certain their cluster disappeared due to a similar situation with his control plane. We've seen it happen twice now in 2 different clusters."

This is the same Flant article, but in English. In total there are three reports of the problem, if the Flant article counts as one of them.

L

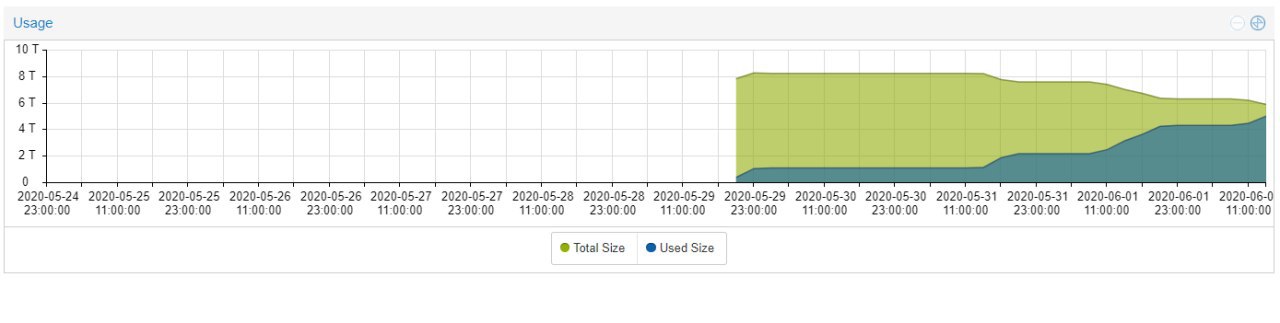

Can anyone explain this Ceph behavior?

L

Why does the pool size shrink as the pool fills up?

Г

Why does the pool size shrink as the pool fills up?

you're probably counting raw?

L

Proxmox does the counting. Possibly incorrectly.

ВН

Proxmox does the counting. Possibly incorrectly.

Well, for that you need to understand how it counts in the first place, and then the answer will most likely come by itself)

L

Well, for that you need to understand how it counts in the first place, and then the answer will most likely come by itself)

I suppose the used and free space figures are pulled by this piece of code. Does anyone know Perl? Tell me what's going on here)) I tried looking at rados df --format json, and the pool size is fine there.

sub status {
    my ($class, $storeid, $scfg, $cache) = @_;

    my $rados = &$librados_connect($scfg, $storeid);
    my $df = $rados->mon_command({ prefix => 'df', format => 'json' });

    my ($d) = grep { $_->{name} eq $scfg->{pool} } @{$df->{pools}};

    # max_avail -> max available space for data w/o replication in the pool
    # bytes_used -> data w/o replication in the pool
    my $free = $d->{stats}->{max_avail};
    my $used = $d->{stats}->{stored} // $d->{stats}->{bytes_used};
    my $total = $used + $free;
    my $active = 1;

    return ($total, $free, $used, $active);
}
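
For those who don't read Perl: the sub above takes the pool's entry from the `df` mon command and reports `total = used + max_avail`, so the "size" is derived, not a fixed capacity. Here is a rough Python sketch of the same arithmetic, assuming the JSON has already been parsed into a dict. The function name `pool_status` and the sample numbers are hypothetical, not real cluster output.

```python
def pool_status(df, pool_name):
    """Mirror of the Perl logic: total is derived as used + max_avail."""
    pool = next((p for p in df["pools"] if p["name"] == pool_name), None)
    if pool is None:
        raise KeyError(f"pool {pool_name!r} not found in df output")
    stats = pool["stats"]
    free = stats["max_avail"]
    # Prefer "stored"; fall back to "bytes_used" when "stored" is absent.
    used = stats.get("stored")
    if used is None:
        used = stats["bytes_used"]
    total = used + free
    return total, free, used


# Hypothetical snapshots of the same pool at two points in time.
early = {"pools": [{"name": "rbd", "stats": {"stored": 100, "max_avail": 900}}]}
later = {"pools": [{"name": "rbd", "stats": {"stored": 300, "max_avail": 600}}]}

print(pool_status(early, "rbd"))  # total, free, used
print(pool_status(later, "rbd"))
```

With these made-up numbers the reported total drops between the two snapshots because `max_avail` fell by more than `stored` grew. Whether that is what happens on the cluster in the screenshot is an assumption; comparing `max_avail` and `stored` over time in the `ceph df` output would confirm or rule it out.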